Project Leader: Dr. Piotr Przymus

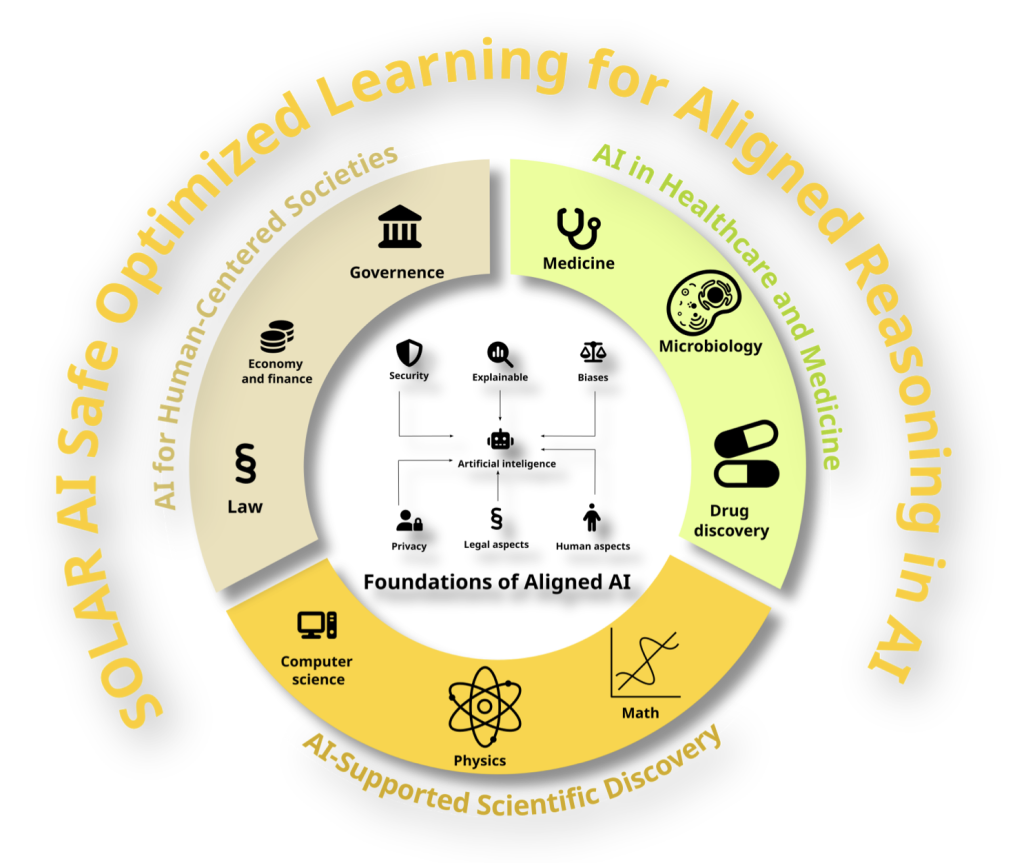

SOLAR-AI (Safe Optimized Learning for Aligned Reasoning in AI) is an interdisciplinary research project aimed at developing safe, interpretable artificial intelligence systems aligned with established societal, scientific, and medical values. The project assumes that aligning AI with values and norms is context-dependent, and that expectations for AI systems differ across these three domains.

SOLAR-AI combines fundamental research with practical implementations, creating solutions that support decision-making and accelerate scientific discovery while ensuring trust, safety, and ethical standards. The project develops methods for value learning, explainability, bias mitigation, and decision-making under uncertainty, which form the foundation for these practical applications.

In society, AI supports decisions in areas such as law, finance, and management while remaining interpretable and compliant with legal and ethical norms. In science (for example, physics, mathematics, and computer science), it aids modeling and hypothesis generation with an emphasis on the accuracy and reproducibility of results. In medicine, AI operates safely, transparently, and accountably, adhering to ethical principles and medical standards, particularly in decisions affecting patient health and dignity.

SOLAR-AI provides a framework for developing AI systems that take into account the specific values and risks relevant to each domain.